Summary

What we’re building: A comprehensive new course portfolio based on defence-in-depth - covering everything from technical alignment to societal resilience.

How we’ll do it: Hiring the best domain experts to design and run each track (starting with AI Governance - applications are now closed!)

When it launches: Late 2025, rolling out over the following year

Why now: o3 showed us the pace of AI progress had shifted. After interviewing 50+ experts, we’re designing something that matches what’s actually needed

A quick history of BlueDot

We founded BlueDot in 2022 and began by running the world’s largest courses on AI alignment. By late 2024 we had expanded our portfolio to include AI Governance and Biosecurity.

In total, we’ve trained ~5,000 people across technical AI safety, AI policy, and biosecurity. Around 1,000 graduates now work in high-impact jobs at organisations including Anthropic, the UK AI Security Institute, and Google DeepMind.

Over the years, we’ve built slick infrastructure to run cohort-based learning at scale, a wonderful community of learners and facilitators, and an awesome team.

Time for something bigger

When OpenAI announced o3 in December 2024, it smashed benchmarks in coding, maths and general reasoning to a degree that caught even experts off guard. We’d entered a new paradigm of reasoning models, and it was clear that things weren’t going to slow down.

We took a hard look at our courses and realised they weren’t comprehensive enough for what’s coming. The field needed something bigger.

So we conducted 50+ interviews with experts across the AI safety community and read a stack of publicly available literature on AI safety strategy. What we found shaped our new approach.

What we learnt from 50+ AI safety experts

Our conversations with these AI safety experts covered:

How soon they expect AI capable enough to pose major risks

How costly it will be to keep these systems under control

How quickly frontier capabilities will spread to bad actors

Whether AI will make it easier to attack or defend society

How much evidence governments need before they’ll act

Suggested strategies ranged from centralised governmental control over AI training and deployment (favoured by those most concerned about bad actors) to fully decentralised AI training whilst building societal resilience (favoured by those most concerned about concentration of power).

For more details, read our blog post and register your interest in our upcoming AGI Strategy Course!

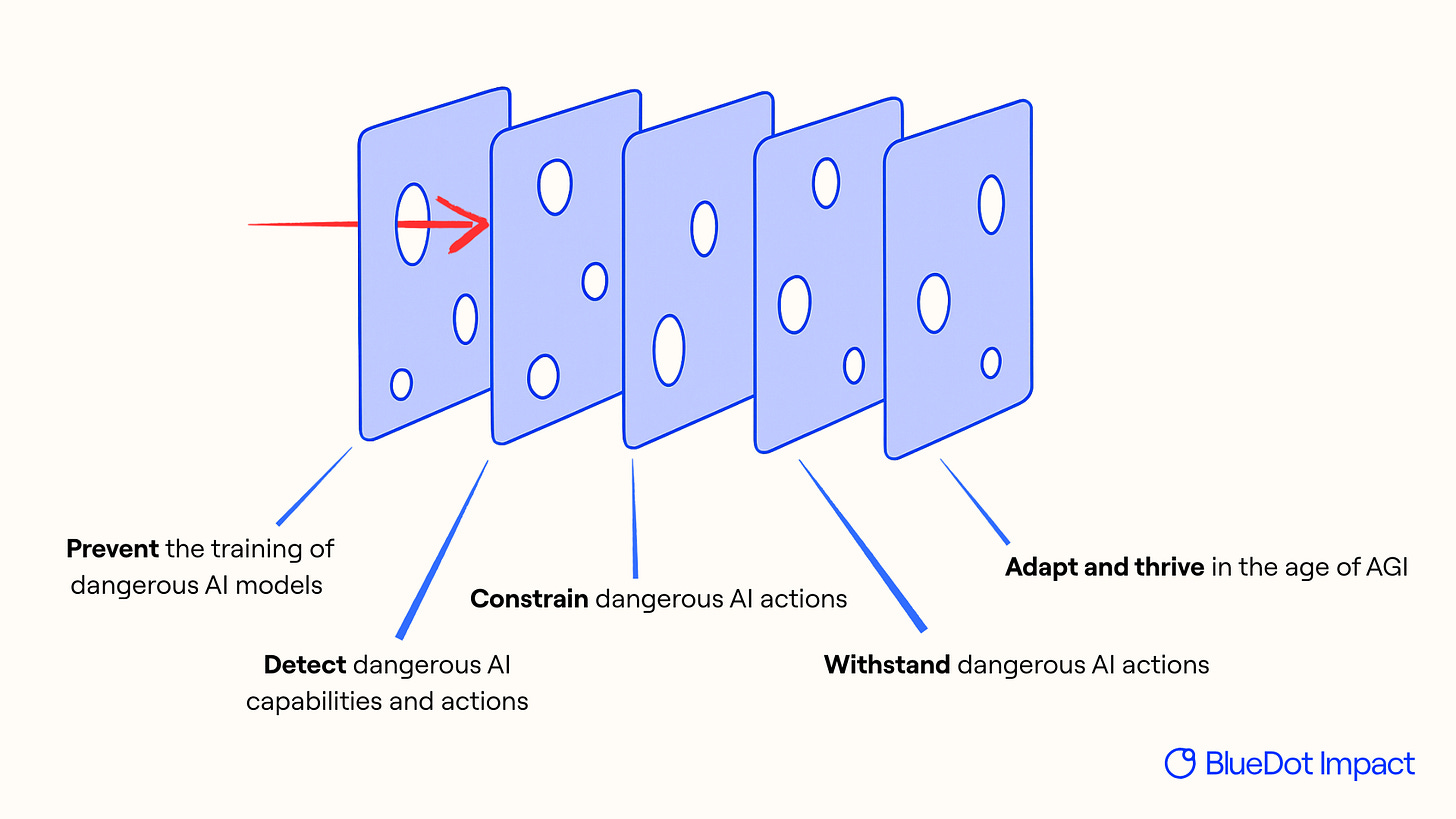

A defence-in-depth framework for AI

Defence-in-depth emerged as a smart approach for this stage of our AI safety work. It is a method for safely harnessing powerful technologies. The core principle is to have multiple independent defensive layers, so that when one fails, others are there to back it up.

Modern nuclear reactors use it: containment vessels, backup cooling systems, control rod fail-safes.

Aviation uses it: pilot training, co-pilot verification, mechanical redundancies, air traffic control, maintenance protocols.

No single point of failure can bring down the system. These layers of protection let us unlock enormous benefits while limiting the risk of major disaster.
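To see why stacking independent layers helps, here is a minimal sketch in Python. It assumes each layer fails independently, and the 20% failure rate per layer is made up purely for illustration. The function name `breach_probability` is hypothetical. Real layers are rarely fully independent, so treat this as intuition rather than a risk estimate.

```python
from math import prod


def breach_probability(layer_failure_rates):
    """Chance that a threat gets past every layer.

    Assumes layers fail independently, so the individual
    failure probabilities simply multiply together.
    """
    return prod(layer_failure_rates)


# Hypothetical numbers: each layer misses 20% of threats.
single_layer = breach_probability([0.2])
five_layers = breach_probability([0.2] * 5)

print(f"One layer:   {single_layer:.5f}")
print(f"Five layers: {five_layers:.5f}")
```

Under these assumptions, five leaky layers together let through far fewer threats than any one of them alone. If the layers share a common weakness, the benefit shrinks, which is why independence between layers matters so much.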

We believe that we need defence-in-depth for AI, and that the following five layers are one useful way of categorising most AI safety interventions:

The defence-in-depth model: Like Swiss cheese, each layer has vulnerabilities (holes), but by stacking independent layers, we ensure threats can’t pass straight through.

Prevent the training of dangerous AI models

Stop unacceptably dangerous AI systems from being built by rogue, hostile or unsafe actors

Example interventions: Compute governance, domestic regulation, international treaties

Detect dangerous AI capabilities and actions

Identify what AI systems can do before they cause harm

Example interventions: Capability evaluations, interpretability research, model auditing

Constrain dangerous AI actions

Keep AI systems under control even if they have dangerous capabilities

Example interventions: Alignment and control techniques, robustness and jailbreak defence

Withstand dangerous AI actions

Build resilience against AI-enabled threats across society

Example interventions: Biosecurity and cybersecurity hardening, crisis response systems

Adapt and thrive in the age of AGI

Navigate societal transformation while ensuring broad prosperity

Example interventions: Economic policy reform, governance innovation, redefining human purpose (no biggie)

Note: People will disagree fiercely about where to set the threshold for “dangerous” in each layer – there are real tradeoffs to being too heavy-handed. Most would agree we shouldn’t prevent training today’s frontier LLMs, AND that we don’t want anyone training massively superhuman AI with no guardrails. The debate shouldn’t be whether we need these defensive layers - it’s how thick each one should be.

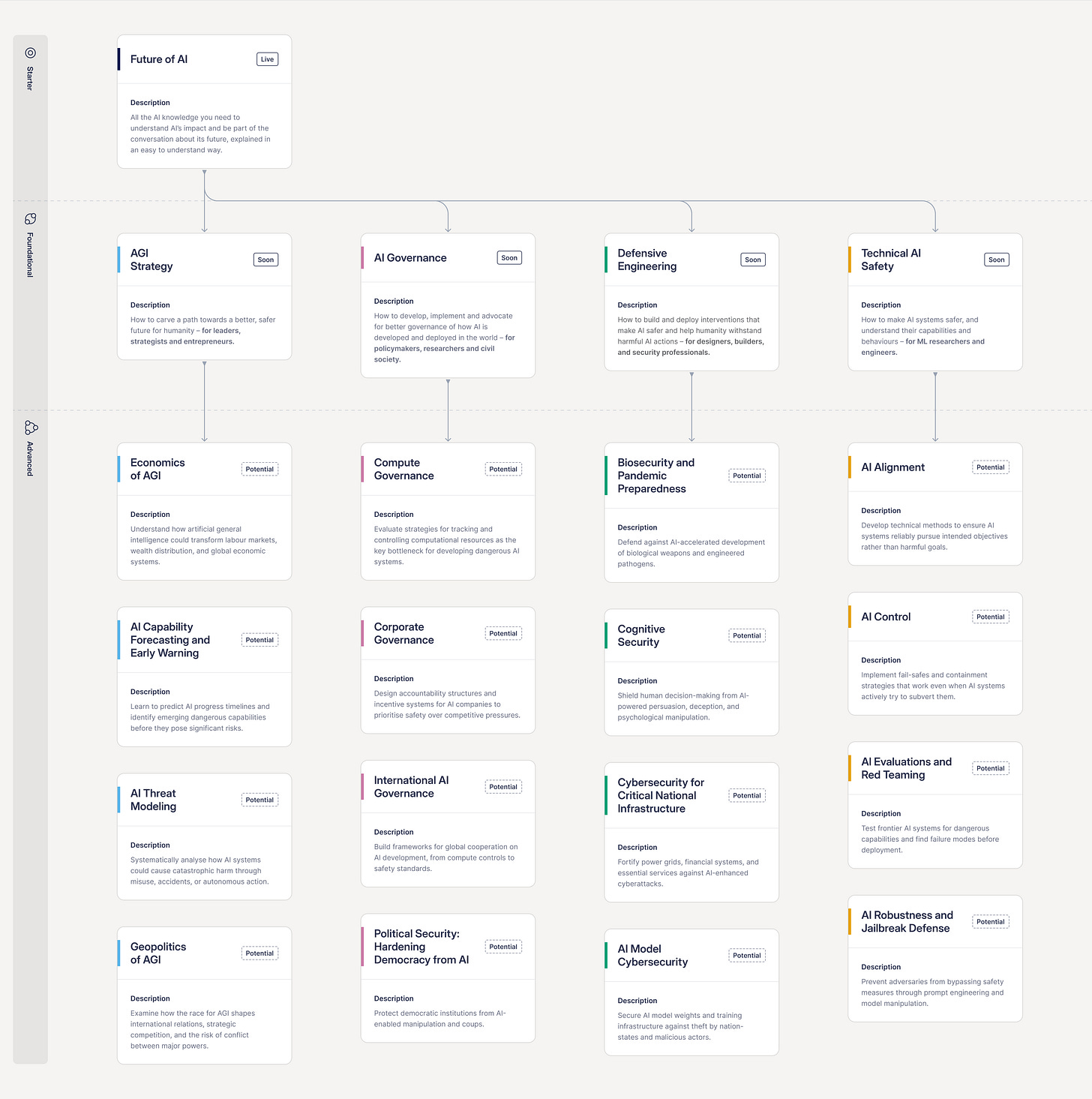

Our vision for a new course portfolio

We believe defence-in-depth is a smart way to approach this challenge because it accepts that some safeguards will fail. We need many different types of people building interventions across every layer - because we don’t know which ones will hold.

The scale is huge. Each defensive layer needs dozens of specialisations, and we are building courses to address each defensive layer:

Prevent: Treaty negotiators, chip security experts, policy designers

Detect: Interpretability researchers, capability evaluators, compliance auditors

Constrain: Control engineers, alignment researchers, red-teamers

Withstand: Biosecurity experts, cybersecurity specialists, crisis responders

Adapt: Economists, governance researchers, philosophers

We need to build an entire defensive workforce, not a handful of AI safety researchers.

You can see a high-quality PDF here.

The portfolio has three levels:

Level 1: Starter

Future of AI - A 2-hour introduction to AI’s current capabilities and trajectory. Open to everyone.

Level 2: Foundational. Four parallel tracks that equip a range of people to work across the defensive layers:

AGI Strategy - For leaders and entrepreneurs navigating the path to beneficial AI

AI Governance - For policymakers shaping how AI is governed at corporate, national and international levels

Defensive Engineering - For builders creating resilient infrastructure and response systems

Technical AI Safety - For ML engineers working on making AI systems safer and understanding their capabilities

Note: These domains cut across the defensive layers. Governance is required for all five layers. Technical AI safety is required for at least ‘detect’ and ‘constrain’.

Level 3: Advanced. Intensive programmes tackling specific challenges within each domain. The exact courses will be shaped by the specialists we hire and the field’s most pressing needs.

We’re launching Level 2 courses first, rolling out the full portfolio over the next 12 months.

How you can contribute

We’re looking for experts who build. If you’ve thought deeply about AI governance, technical safety, AGI strategy or defensive engineering AND you’re a builder who gets things done, we want to hear from you.

What you’ll actually do. As a BlueDot specialist, you’ll run the entire pipeline from identifying critical needs to getting skilled people working on them:

Identify gaps in your domain - what’s blocking progress in AI safety?

Recruit exceptional people who can fill those gaps

Train them through courses you design with world-leading experts

Place them in roles where they’ll have real impact

Previous BlueDot specialists have leveraged their roles to become field leaders:

Luke Drago (Governance Specialist) co-authored The Intelligence Curse (exploring AGI economic transitions), wrote for Time magazine, and co-founded Workshop Labs.

Adam Jones (Technical AI Specialist) advised the UK Government on AI safety, and now works on safety at Anthropic.

Within 12 months, this could be you.