Summary

In May 2025, we ran a short bootcamp with the intention of helping great operations people land and excel in operations roles within AI safety organisations. As many of these organisations are struggling to hire enough high-quality candidates, BlueDot aims to “bring these candidates into existence” by finding people with great operations experience and skilling them up by teaching them how to:

Talk confidently about core AI safety concepts

Explain the role of key organisations in the global AIS ecosystem

Leverage AI tools to improve their productivity

Apply their understanding to practical operations challenges

Evaluate their fit for ops roles in AIS organisations

The course received overwhelmingly positive feedback on our website (86% of ratings were 5 stars as of 2 June), with participants particularly valuing the practical exercises, diverse group discussions, and up-to-date content.

The Bootcamp also successfully achieved its objective of motivating operations professionals to apply for AI safety roles, with nine participants explicitly stating they will apply.

The main markers of success for BlueDot will be:

The number of course participants who secure roles at UK AISI, one of the key destinations promoted by the course.

Whether graduates go on to work in AI safety within ~3 months of the course.

We received feedback in several key areas, which are discussed in more detail below. These include:

Define “operations” more clearly and describe what day-to-day work may look like in AI safety orgs, so that participants understand what operations roles entail.

Explain why we focus on AGI risks as opposed to current risks.

Extend the discussion windows during the sessions to allow for more in-depth conversation, or alternatively reduce the number of scenarios discussed.

Consider adding exercises that let participants demonstrate operational agency and big-picture thinking, alongside using AI tools, to provide more operational upskilling.

Marketing and outreach

The bootcamp was organised on a short timeline, so outreach played a key role in finding great participants at short notice.

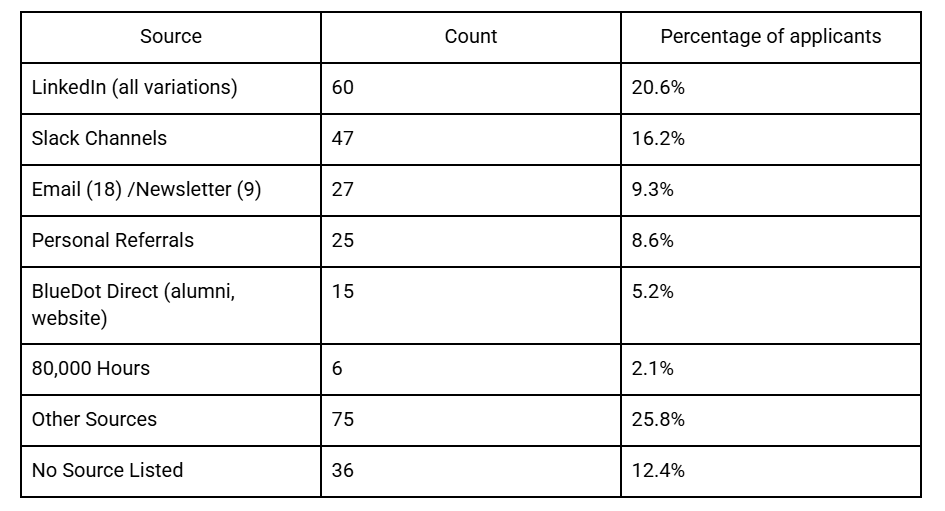

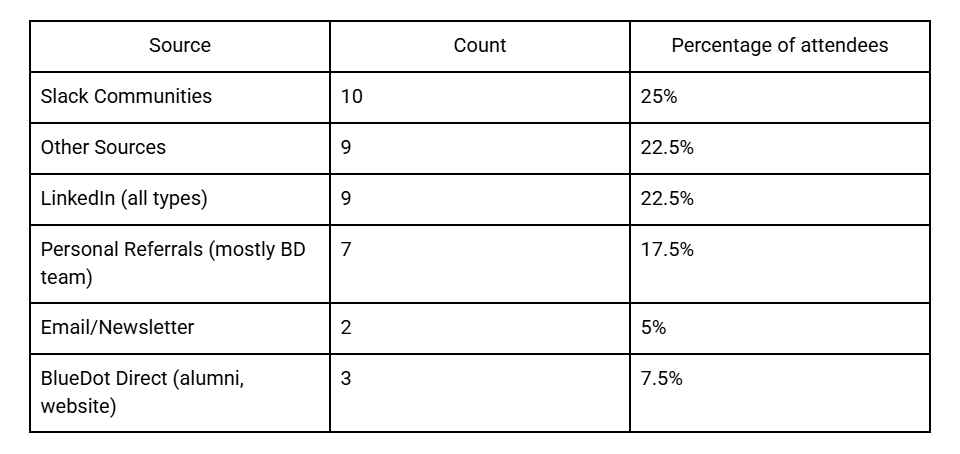

We made posts on various platforms and slack channels, and identified suitable candidates from databases like BlueDot course alumni or High Impact Professionals. Below are two tables that show the success of different forms of outreach. The first table shows the number of people who applied, and the second table shows the people who were accepted into the course.

All applications

Accepted attendees

This data offers several lessons for improving outreach next time:

Many applications did not list a source. Make this a mandatory field in the application next time so we can better evaluate our outreach methods.

Create a uniform dropdown list of source platforms, with an optional notes field for details such as specific Slack channels or people who reached out personally.

The general takeaways for outreach are:

LinkedIn and Slack channels seem like the best tools to recruit participants.

Personal outreach from BlueDot members found some great candidates, but was much more time-consuming by comparison.

We also received helpful feedback from one participant about how to improve the application form, noting:

The lack of an acknowledgement email

A pop-up saying the candidate would hear back on Monday, even though they applied later (now rectified)

No option to add extra detail on the form

The stated completion time was much shorter than the time it actually took

Course feedback

IT issues

Multiple participants experienced technical issues with the course page. Some feedback included:

Loss of progress:

When opening the course through the BlueDot website, they lost their progress and were redirected to the beginning of the course, making it hard to pick up where they left off.

No file access (now rectified):

One participant wanted access to the final exercise further in advance, as they did not have time to complete it during the work week.

Issues with submitting feedback:

Not always being able to submit feedback on the course website.

Resources

Participants rated the resources from Unit 1 highly, particularly appreciating that the content was up to date, easy to digest, and provided great context for non-experts, and that it made them consider the proximity of AGI and its implications more seriously.

Multiple people appreciated the scenario in Chapter 4, which showed what it would look like if different entities controlled AGI.

Feedback for improvement that came from multiple participants included:

Providing more detail on the variety of operations roles in AI safety and what their day-to-day entails.

Setting clear learning objectives and outcomes so that the goal of the course is clear.

Individual participants also suggested:

Adding more content about the current state of AI regulation.

Adding more information about different types of AI (e.g. supervised and unsupervised learning) and typical use cases across different sectors.

Showing what guardrails are currently in place for certain models and how they differ by company.

Adding more information about the game-theoretic implications of great-power conflict in the context of AI.

Including case studies of current AI risks and what is being done to address them.

Including a more technical overview of AI safety (requested by some participants who already had strong AI safety context).

Adding more context for the Vending-Bench evaluation.

Multiple people also reported that Unit 2 took them significantly longer to complete than Unit 1. As a result, they could not finish all the Unit 2 exercises before the session, and felt the units were poorly balanced.

Discussions

Participants praised the value of in-person discussions and appreciated how the diversity of the group exposed them to different perspectives and helped facilitate meaningful discussions. The situational activities in Session 1 were seen as interesting, helpful, and occasionally “eye-opening”, as they inspired a sense of urgency and dread about resolving them.

Some constructive feedback included:

Discussing a longer timeline for crisis scenario preparation (e.g. 2 years).

Focusing on operational problems we could face today, e.g. a new LLM is released and within weeks it’s used to create highly persuasive election misinformation campaigns in multiple countries.

Being asked to brainstorm the questions before the session, so they can write down their thoughts in advance and spend less time “rush” writing during the session.

Many participants said that allocating more time to the discussion exercises would make them more valuable, as they were often cut short due to time constraints.

Specific suggestions included:

Have 3 sessions rather than 2 to allow more in-depth conversation.

Add at least 30 extra minutes to discuss the scenarios.

Go deeper into a single scenario instead of covering 2.

For Session 2, many participants felt that the 20-minute discussion of the Unit 2 practical exercise was too long and should have been 10 minutes instead. Besides wanting to talk about other topics, they noted that not everyone had finished the exercise in time, so not everyone could discuss it for 20 minutes.

Practical exercise

Many participants noted that they learn by doing and valued putting what they had learned into practice by using AI tools for operations tasks. Eight participants noted that this exercise made them more comfortable using AI tools to improve their productivity or taught them about tools they were not aware of.

One participant especially appreciated using an LLM to simulate a manager and provide feedback on their work.

Another participant would welcome an additional optional operations exercise to further hone their skills.

A couple of participants were already familiar with AI tools and did not feel they benefitted much from the exercise; they had hoped to learn what would make them better operators in general.

Operations focus

Some participants were a little confused about what operations roles actually entail and what the day-to-day work would look like in AIS organisations. As a result, they were unsure whether their skills matched these roles.

Some feedback included:

Include a definition of operations.

Show how operations roles may differ based on the size / focus of the organisation.

Include some realistic operations problems / tasks that ops people in AIS organisations would work on.

On the other hand, participants with more operations experience said that while the exercise in Unit 2 was interesting, it was not fully representative of what operations work actually entails. They specifically noted that ops requires “big picture thinking” and questioning why things are done the way they are, so that processes can be improved. Some suggested adding an exercise that requires more agency or independent, big-picture thinking.

One participant noted that they had hoped for more operations upskilling to gain an edge over other candidates, especially those already using AI tools in their workflow.

Course follow-up

One participant noted that they really appreciated the call to action at the end of the course, including the resource detailing the roles that are currently open.

Nine participants indicated that they will apply, or are strongly motivated to apply, to roles in AI safety.

Others noted that they are excited about the field but uncertain about their fit or knowledge, or about what skills hiring managers are actually looking for. They suggested they could benefit from guidance from BlueDot or course alumni on securing these roles and becoming more competitive candidates, such as advice on refining their applications or being offered a referral. One participant suggested it would be helpful to be directed to resources where they can continue learning and upskilling.

One participant noted that after completing the course, they don’t feel they have a substantial competitive advantage over other good candidates for AI safety roles; they suggested that the course could benefit from being longer and focusing more on operations upskilling.

General positive feedback

“It has made me more determined to apply for operations roles in AI safety and also gave me a more positive mindset about what can be achieved with the help of LLMs and others.”

“The acceleration of AI capabilities in both the reading material and discussion re-calibrated me to prioritise joining an AIS org more urgently rather than [some specific non AIS options]. Going through exercises 2-9 in unit 2 made me a lot more confident I’m a competitive candidate for AIS operations roles.”

“The bootcamp introduced me to ops roles and I realised they might be an ideal fit for me, combining my ops skills with my interest and experience in AI safety. I’ll definitely be actively exploring similar roles going forward!”