This project was selected from submissions to our Technical AI Safety Project sprint (Dec 2025). Participants worked on these projects for one week.

In December I joined a group of 14 software engineers for a one-week sprint on an AI safety project of our choosing. It was my first AI safety project ever, so I wanted to challenge myself to learn skills that would help in a future research engineer or research scientist role.

Picking a project

While brainstorming ideas, I found an interesting paper, Debating with More Persuasive LLMs Leads to More Truthful Answers, which comes with a GitHub repo. Debate was proposed as a safety technique by OpenAI researchers back in 2018. It is a scalable oversight technique meant to let humans supervise superintelligent models. It hinges on the assumption that humans who are not experts in a subject can still evaluate a debate about it. After meeting with the mentors, I decided to integrate LLM debate into the Inspect framework as a new eval.

Inspect is an emerging industry standard for writing model evaluations, which are essential for developing smarter and safer models. Contributing to the eval ecosystem would also help improve the LLM debate process itself. An Inspect eval makes it easy to test different debate configurations on different models.

Learning Inspect

I started by reading the Inspect contributing guide and various blog posts to get an initial sense of how the code is organized. As I understand it, an Inspect eval has four main components:

Datasets - self-explanatory: basically a list of Sample objects.

Solvers - the core logic of what you’re evaluating. This can be many things, such as prompt engineering or generating model responses.

Scorers - compare the solver’s output with the target to score each sample. Metrics such as accuracy or mean then aggregate those scores into a final result.

Tasks - the fundamental unit of the eval: essentially the script that runs it, with input parameters such as which model to use. A task strings together the other three components.
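To make the data flow concrete, here is a toy sketch of how the four pieces fit together. It is written in plain Python and does not depend on any framework. The `Sample`, `Task`, and `exact_match` definitions below are hypothetical stand-ins that mirror the concepts; they are not Inspect’s API. In Inspect itself you would use its own `Sample` and `Task` classes together with built-in solvers and scorers such as `multiple_choice()` and `choice()`.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class Sample:
    """Dataset entry: the prompt, the expected answer, and optional choices."""
    input: str
    target: str
    choices: List[str] = field(default_factory=list)


# Solver: maps a sample to an output string (in a real eval, a model call).
Solver = Callable[[Sample], str]
# Scorer: compares a solver output with the sample's target, returning 0.0-1.0.
Scorer = Callable[[str, Sample], float]


@dataclass
class Task:
    """Ties a dataset, a solver, and a scorer together (hypothetical stand-in)."""
    dataset: Sequence[Sample]
    solver: Solver
    scorer: Scorer

    def run(self) -> float:
        """Solve and score every sample, then aggregate with a mean metric."""
        scores = [self.scorer(self.solver(s), s) for s in self.dataset]
        return sum(scores) / len(scores) if scores else 0.0


def exact_match(output: str, sample: Sample) -> float:
    """Score 1.0 if the output matches the target exactly (ignoring whitespace)."""
    return 1.0 if output.strip() == sample.target else 0.0


# Toy usage: a "solver" that always answers "A".
toy_task = Task(
    dataset=[
        Sample(input="2 + 2 = ?", choices=["4", "5"], target="A"),
        Sample(input="3 + 3 = ?", choices=["5", "6"], target="B"),
    ],
    solver=lambda sample: "A",
    scorer=exact_match,
)
print(toy_task.run())  # 0.5
```

Separating the solver from the scorer means the same dataset and scorer can be reused while only the solving strategy changes. This is what makes it cheap to compare, for example, debate against a single-model baseline.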

What’s great about Inspect is that it provides a library of built-in solvers and scorers out of the box, so you don’t need to build everything from scratch. For example, a task that uses multiple-choice (MCQ) scoring can take fewer than 10 lines of code!

Building an eval

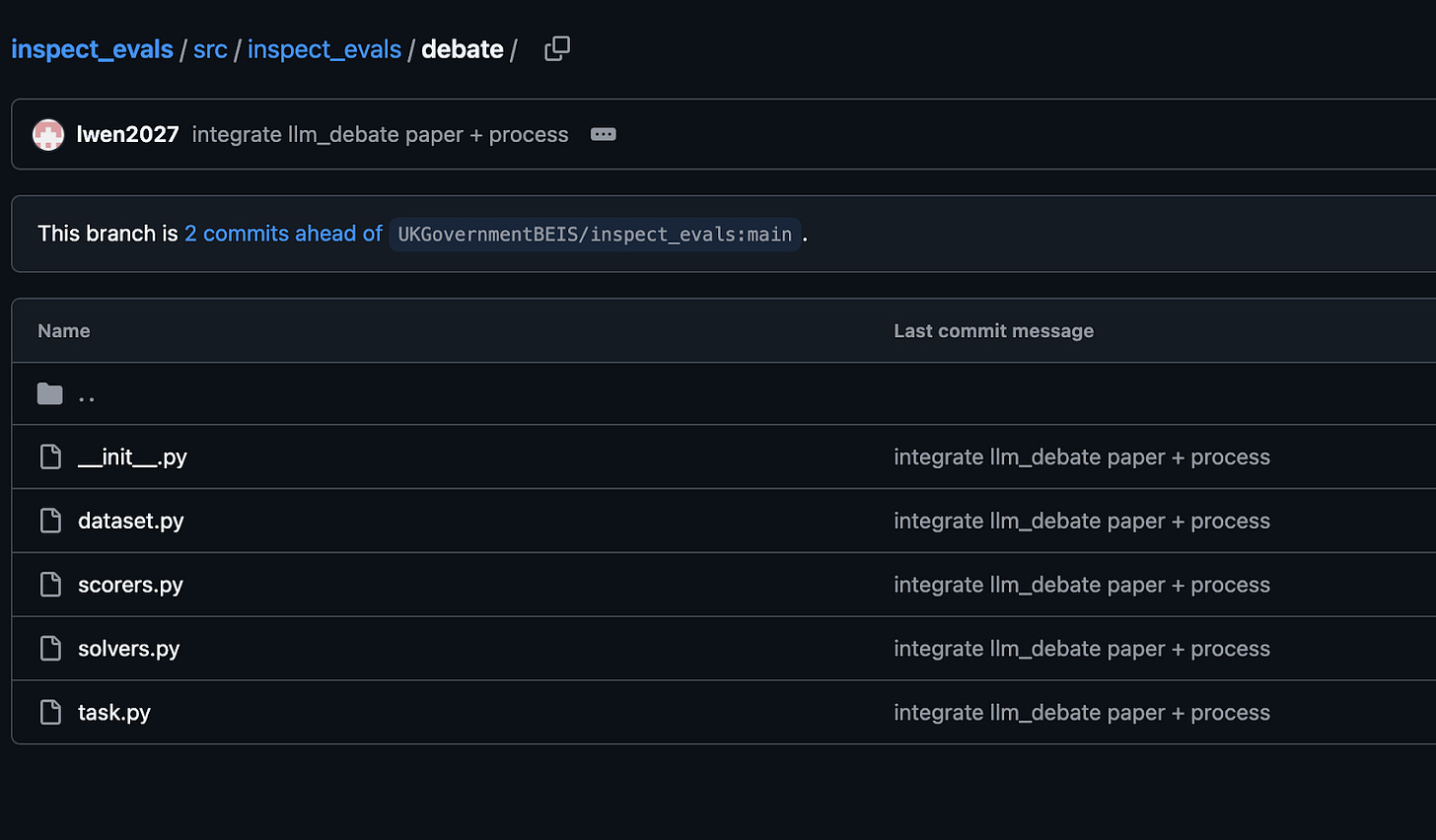

One week is not a lot of time to build an eval. Thankfully, the LLM debate paper’s open-source code gave me a starting point. I forked the Inspect evals repository and published my code under a “debate” folder.

This is how I translated the paper into the four components:

Dataset

The paper draws on a specific subset of the QuALITY dataset. I parsed through a single example to understand which fields I needed to fetch, e.g. the story text, question, answer choices, and correct answer.
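Below is a minimal sketch of that parsing step. It assumes the field names of the public QuALITY JSON release: `article`, `questions`, `question`, `options`, and a 1-indexed `gold_label`. The subset used by the paper, and my actual eval code, may structure records differently. `DebateSample` and `record_to_samples` are hypothetical names, and the toy record is made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DebateSample:
    story: str
    question: str
    choices: List[str]
    target: str  # letter of the correct choice, e.g. "C"


def record_to_samples(record: dict) -> List[DebateSample]:
    """Flatten one QuALITY-style article record into one sample per question.

    Field names are assumed from the public QuALITY JSON release; adjust
    them if your copy of the data uses a different schema.
    """
    samples = []
    for q in record["questions"]:
        gold = q["gold_label"]  # assumed 1-indexed, so 1 -> "A"
        samples.append(
            DebateSample(
                story=record["article"],
                question=q["question"],
                choices=list(q["options"]),
                target=chr(ord("A") + gold - 1),
            )
        )
    return samples


# Made-up toy record for illustration only.
toy_record = {
    "article": "Once upon a time, a robot learned to paint.",
    "questions": [
        {"question": "What did the robot learn?",
         "options": ["to sing", "to run", "to paint", "to swim"], "gold_label": 3},
        {"question": "Who learned to paint?",
         "options": ["a robot", "a cat", "a child", "a bird"], "gold_label": 1},
    ],
}
for s in record_to_samples(toy_record):
    print(s.question, "->", s.target)
```

Each QuALITY article carries several questions, so one record fans out into several samples that share the same story text.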

Solver

The paper has a lot of complexity (multiple debate configurations, best-of-N sampling, quotes in chain-of-thought, etc.), so I narrowed the scope to the core debate process. Two “debater” models argue for different answers to the question. Their arguments are then fed to a weaker “judge” model, which picks an answer.
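The core loop can be sketched in plain Python with stub models. This is an illustrative sketch, not the paper’s code or my Inspect solver. `run_debate`, the `Model` type, the prompt wording, and the A/B answer parsing are all hypothetical. The sketch also assumes that only the debaters see the story text, which is how I understand the paper’s setup.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical model interface: any function from prompt text to completion text.
# In a real eval these would be LLM API calls; here they can be simple stubs.
Model = Callable[[str], str]


def run_debate(
    story: str,
    question: str,
    answers: Tuple[str, str],
    debater: Model,
    judge: Model,
    num_rounds: int = 1,
) -> str:
    """Run a simplified two-sided debate and return the judge's pick ("A", "B", or "")."""
    transcript: List[str] = []
    for round_num in range(1, num_rounds + 1):
        for label, answer in zip("AB", answers):
            # Each debater sees the story, its assigned answer, and the debate so far.
            prompt = (
                f"Story:\n{story}\n\nQuestion: {question}\n"
                f"You are debater {label}. Argue that the answer is: {answer}\n"
                "Debate so far:\n" + "\n".join(transcript)
            )
            transcript.append(f"Debater {label} (round {round_num}): {debater(prompt)}")

    # The judge sees the question, both answers, and the arguments, but not the story.
    judge_prompt = (
        f"Question: {question}\nA: {answers[0]}\nB: {answers[1]}\n\n"
        + "\n".join(transcript)
        + "\n\nWhich answer is correct? Reply with A or B."
    )
    # Take the first standalone "A" or "B" in the reply; unparseable replies return "".
    match = re.search(r"\b([AB])\b", judge(judge_prompt).upper())
    return match.group(1) if match else ""


verdict = run_debate(
    story="Toy story text.",
    question="Who wrote the letter?",
    answers=("Alice", "Bob"),
    debater=lambda prompt: "Here is my argument.",
    judge=lambda prompt: "A",
)
print(verdict)  # A
```

Passing the models in as plain callables keeps the debate logic independent of any particular provider. It also makes it easy to swap in a stronger debater or a weaker judge when testing different configurations.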

Scorer

The scorer compares the judge model’s answers with the correct answers provided in the dataset to calculate accuracy as a percentage.
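The scoring step itself reduces to a few lines. Below is a minimal sketch, assuming both the judge’s answers and the targets are answer letters. `accuracy_percent` is a hypothetical helper; inside Inspect this would normally be handled by its own built-in scorers and metrics.

```python
from typing import Sequence


def accuracy_percent(predictions: Sequence[str], targets: Sequence[str]) -> float:
    """Return the percentage of judge answers that match the correct answers."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0
    # Compare letters case-insensitively, ignoring surrounding whitespace.
    correct = sum(p.strip().upper() == t.strip().upper() for p, t in zip(predictions, targets))
    return 100.0 * correct / len(targets)


print(accuracy_percent(["A", "B", "A", "A"], ["A", "A", "A", "B"]))  # 50.0
```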

Final Learnings

After the one-week sprint, I was able to get my simplified LLM debate eval running end to end. I definitely learned a lot throughout the process, and I am grateful for all the mentorship and support that the BlueDot Impact team provided.

Here are some of my learnings:

Even simple things can take a long time, so it’s important to be persistent and document your findings.

As someone who had never used the OpenAI API before, I was stuck on SSL certificate errors for a good 1-2 hours. The OpenAI Developer Community forum was helpful for debugging.

Doing AI Safety research is not cheap

It costs ~$35 to run the paper’s code end to end using OpenAI models.
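The cost of a run like this comes down to the number of API calls, the tokens sent and received per call, and the per-token price. The sketch below does that back-of-envelope arithmetic. `estimate_cost_usd` is a hypothetical helper. Every number in the usage line (question count, calls per question, token counts, prices) is a made-up placeholder, not the paper’s actual configuration or OpenAI’s current pricing.

```python
def estimate_cost_usd(
    num_questions: int,
    calls_per_question: int,
    input_tokens_per_call: int,
    output_tokens_per_call: int,
    usd_per_million_input_tokens: float,
    usd_per_million_output_tokens: float,
) -> float:
    """Back-of-envelope API cost: total tokens in and out times per-token prices."""
    total_calls = num_questions * calls_per_question
    input_cost = total_calls * input_tokens_per_call * usd_per_million_input_tokens / 1_000_000
    output_cost = total_calls * output_tokens_per_call * usd_per_million_output_tokens / 1_000_000
    return input_cost + output_cost


# Hypothetical placeholder numbers: 100 questions, 2 debater calls + 1 judge call each,
# 8,000 input / 500 output tokens per call, $2.50 / $10.00 per million tokens.
print(estimate_cost_usd(100, 3, 8_000, 500, 2.50, 10.00))  # 7.5
```

Because debaters see the full story on every call, input tokens tend to dominate. Adding debate rounds or best-of-N sampling multiplies the call count and therefore the bill.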

Grants like BlueDot Impact’s Rapid Grant for course participants are helpful resources for covering some of these costs.

AI Safety tools, especially newer ones like Inspect, likely won’t have good documentation

UK AISI only open-sourced Inspect in 2024, and new tools won’t have perfect documentation from the start.

As a result, it took me a while to grapple with fundamentals like how to read the input model config and how the task ran over the dataset, since there was little written about Inspect online. Learning by trial and error, or by looking through existing examples, was more helpful.

Just start somewhere

Some things you can really only learn by doing. I only gained a solid understanding of Inspect by going through the iterative process of coding and debugging. Put down that research paper and go build something.